Nothing Is Real Anymore. But That Didn’t Start With AI.

Navigating illusion, trust and the work of being human in an age of Generative AI

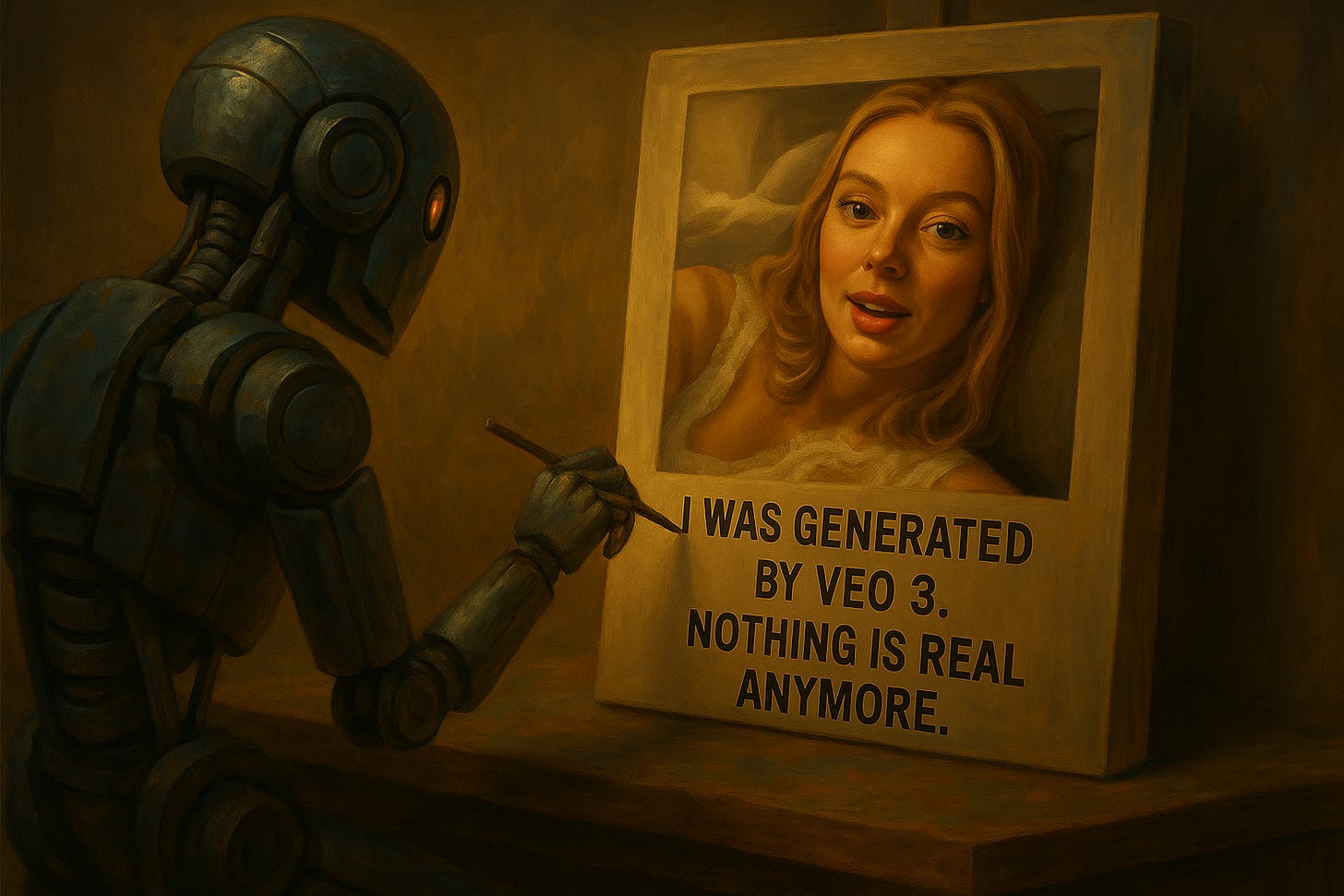

“I am AI generated by Veo3. Nothing is real anymore.”

Thus spake the digitally rendered woman, lying in bed, declaring herself Exhibit A in the supposed collapse of our ability to distinguish fact from facsimile. The clip, reposted by @FutureStacked on X (source), came with the usual existential tailspin:

"We are cooked." "How will we know what’s real anymore?"

I blinked at the screen. Then I looked up and around me.

The warm air of my exhale brushed my upper lip. A car hissed down the street, playing a strange countermelody over Zeppelin blaring from my speakers. The AC coughed out a dry chill onto my legs. My phone buzzed with a text from one of my best friends, the kind who makes me laugh until it hurts.

It was an average, unremarkable, but fully lived moment, and none of it was at risk from the talking head on my screen.

Huzzah! Reality will live to see another day.

Still, something in me tensed up. Not at the realism of this deepfake, but at the implications swirling around it. Because the subject wasn’t just announcing her origin. She was making a claim: we won't be able to trust what we see online anymore.

But before we descend into mass hysteria, storing canned beans in our underground bunkers, let’s remember: this didn’t start with AI.

We’ve been here before

Let’s be real: media illusion is not new. Cinema, advertising, even journalism have long engineered perception through narrative, camera angles and Photoshop. And yet, we’ve learned to interpret with discernment. We’ve accepted that the burger on our tray never looks like its all-beef counterpart in the photo. We resign ourselves to the fact that the actors aren’t actually flying through space at hyper-speed. (But we can dream, can’t we?)

Never mind the years I spent working toward those perfect Photoshopped Men’s Health abs. But I digress…

Then came the social network. *cue the collective exhale of authenticity*

At first, it felt like the antidote: messy brunch photos, blurry concert clips, typos, filters, and way, waaay too much information. It felt like real life.

But eventually, social networking became social media. Friends became followers. What was once truly candid became curated, whether performed or sincere, and sometimes brilliantly so. We adapted, again.

And now, a GenAI whispers from the edge of the bed as if delivering the mic drop announcement of our generation: “from this point forward, ‘nothing is real.’”

I think she was late to the party.

Yes, it’s a huge leap: neither a human nor a camera is required to produce photorealistic cinema quickly. But the panic reveals something deeper. Maybe it’s not just the tools we fear. Maybe the panic is shedding light on how easily we’ve trusted appearances all along. Maybe the real threat isn’t that AI might fool us, but that we’ve built entire systems that reward surface over substance.

So the question becomes: what can we actually trust?

The crisis beneath the content

The stakes have escalated. These tools won’t exist just to remix cat videos, sell mushroom coffee, and promote wellness confessions. They can fabricate eyewitness accounts, fake bodycam footage, simulate political confessions.

When media can be generated faster than it can be validated, the burden of proof shifts onto the public, who are left without the tools to do that validation. The ramifications could be dire.

Yes, companies are making some efforts: Meta’s watermarking, Microsoft’s content credentials, and startups like Reality Defender, Hive, and Deepware building detection models. But most solutions are gated, proprietary, or controlled by the same forces scaling the problem.

At the time of writing, the C2PA (Coalition for Content Provenance and Authenticity) is working to establish a technical standard for certifying the origin and history of digital content. Companies like Adobe, Microsoft, and the BBC are part of the initiative, and Adobe has started rolling out Content Credentials in some of its tools.

However, we still don’t have a shared, widely adopted protocol for source verification. No open standard in everyday use. No widely adopted infrastructure to validate content origins. No open fingerprinting systems.

No browser extension for the public.

We audit financial systems. We test software before shipping. Yet we allow “zero-cost” synthetic media, capable of shaping elections, markets, and memory itself, to circulate without provenance. That’s not innovation; it’s systemic negligence.

(For what it’s worth, I ran the original deepfake through Deepware’s free tool. It flagged nothing. So even if you’re paying attention, your tools might not be.)

Embodiment as infrastructure

In 2024, I heard entrepreneur Bo Shao give a talk in San Francisco about AI and Wholeness. He said something I haven’t forgotten:

"Foundation models will have all the knowledge in the world. But the wisdom and the intention will have to come from us."

He shared that for most of his life, he thought of himself as just a head being carried around by a body. "I spent most of my life simulating being human," he said.

Yeah... I can relate.

"Probably how AI feels right now."

And therein lies the distinction. And oh boy, does it matter.

If we’re being honest, many of us in technical professions, or those of us immersed in screens of various sizes, can probably relate in a major way. We operate from the neck up. Our relationships, our work, even our self-perception can begin to feel disembodied. We lead with cognition, but cognition alone isn’t enough.

And this is the fork in the road. Unlike us, AI doesn’t have a body. It doesn’t feel. It doesn’t contextualize thought through breath, blood sugar, memory, or heartbreak. It can output text and can brilliantly learn to assign meaning to patterns, but it can’t tune its signal based on real-time stakes, at least not yet.

However… we can and we do!

Our cognition isn’t clean math. It’s a brilliant, self-revealing, intuitive system, entangled with emotion, shaped by culture, filtered through memory, and guided by physiological signals. Realness as a human is not abstract… it’s embodied. And embodiment isn’t a poetic flourish, or a buzzword from your power yoga instructor. It’s a living architecture of discernment, expression and navigation.

And we already have a premium unlimited lifetime pro-subscription.

Yes, of course we need tools: watermarking, detection models, validation systems. And we need to demand them loudly from the industries building this tech. To me, this is table stakes. But we also need to return to what AI can’t simulate: the lived intelligence of human experience.

And like you, I’m standing at the edge of a great unknown. And I humbly offer this hypothesis: the answers we need won’t come from outpacing machines alone, but from becoming more human than ever before.

This is a call to power, not panic. Not a binary between trust and fear, but a deeper kind of discernment. A return to what’s real, not for tech or AI, but for ourselves.

Where do we go from here?

1. Build validation into the core of generative systems.

Engineers, product leads, and executives must treat content validation as a first-class priority, not an afterthought. If you're working on generative tech and not thinking about accountability, you’re shipping a weapon without a safety.

2. Demand better from the companies creating this technology.

Users, creatives, and the public at large have every right to insist on safeguards. We need opt-out mechanisms, content provenance layers, and independent verification tools. Just as we expect food labels to list their ingredients, we should expect GenAI media to be transparent about its origins.

3. Engage as humans, not as competitors.

GenAI will always outpace us in scale, recall, and speed. But it should not define our north star. It cannot generate meaning in a vacuum. If we reduce ourselves to competing content creators, we’ve already lost. But if we show up as meaning-makers, context-setters, and relationship-builders, we’re reestablishing the collaboration between human and machine in a way that’s more sustainable, self-generating, and honoring of both actors in this dance.

4. Go inward. Seriously.

Refine your nervous system. Learn to recognize presence. Practice being the tuning fork. In a world of synthetic signals, this is your edge. The more fluent you become in your own inner signals, the harder it is for noise to sway you.

Presence isn’t sentimental. It’s strategic. And it’s ours to reclaim.

One more thing…

Not all that glitters is fake.

Decades ago, moviegoers met Andy Serkis' Gollum, the grotesque, tragic, self-tortured figure crawling through The Lord of the Rings trilogy. The Gollum on screen wasn’t "real". He was a CGI creation, born from light and render farms. And yet we felt him… deeply.

Why? Because behind every twitch, hiss and half-eaten raw fish was Andy Serkis, a human actor offering his full embodiment: complete with breath, bones, and heartbreak. It was a dance between human and machine. The engineers at Weta built the scaffolding, but the emotional arc came from a living, breathing body.

That’s the key. We believed Gollum not because of the resolution, but because of the relationality beneath it.

Disney could’ve saved some cash by relying on stock sound effects for Sven, the reindeer in Frozen. He doesn’t talk. But they hired the brilliant voice actor Frank Welker, not just to perform Sven’s grunts and breaths, but to inspire and inject life into the beloved character.

Just because something is digital doesn’t make it fake. Music is vibration, and it can break us to pieces. A video is light and sound, and it can illuminate us. What matters is whether it lands.

So while “Was this made by AI?” remains critically important, maybe the deeper questions to carry alongside it could include:

“Does this carry presence?”

“Does it move something in me or for the human race?”

“Does it reflect a deeper truth, or merely mimic one?”

AI will keep evolving. It will learn to mimic better, faster. But it cannot be us. And in that gap lies our responsibility.

So no, we’re not cooked. But we are being called to think more clearly and to step up to what is ours to own: to make meaning and drive this new relationship with intention. This isn’t about fear. It’s about fidelity: to what is actually real, to each other, and to the deeper intelligence that lives in each of us. We are being asked to bring the best of ourselves into collaboration.

Not by letting machines merely impersonate us, but by working with this technology to extend what we carry at our core. Embodiment isn’t nostalgic. It’s strategic and structural. And it may be the most important technology we have.

🧠 This was written as a meditation, not a manifesto.

I'm curious where this resonates:

What still feels real to you?

What helps you discern?

What signals do you trust?

Feel free to share, or just sit with the questions. Either way, thanks for reading!
